Editor’s note: The following is a guest post from Rahul Auradkar, EVP & GM, Unified Data Services & Einstein at Salesforce.

Every company has information gaps and bottlenecks. When you boil it down, that’s the real value AI brings businesses: scaling access to information to help people connect more dots.

But what happens when you deploy an AI system, like an AI agent, that’s plugged into every one of a business’s data sources and designed to answer questions, proactively surface insights gleaned from hundreds of apps or departments, and take action, whether or not a human has asked it to?

You get an AI agent that can potentially supercharge productivity — and become a major security risk.

OK, cut the doomsday music — this problem isn’t entirely new. After all, humans are responsible for nearly 6 in 10 security breaches.

But AI agents, which are powered by enterprise data from hundreds of apps and can distribute that information at an unprecedented scale, raise concerns to a new level. In fact, Gartner predicts that by 2028, AI agents will be the culprit behind 1 in 4 enterprise security breaches.

As businesses look to adopt and deploy AI agents, they must ensure the nascent technology is deeply integrated with the enterprise data to make the most of its capabilities while also preventing critical data leakage.

When should agents exercise discretion?

For 2025 to be the year agentic AI enters the workforce, an agent must know when and how to hold its “digital tongue” to avoid exposing sensitive data.

Without proper data governance and access management policies in place, AI agents might become non-compliant and create data privacy risks, inadvertently leaking data to users who shouldn’t have access to it.

Many data platform providers offer governance tools that can help with data security, accuracy and integrity — but their limitations are significant.

These solutions are also often technical and complex because they were built with analysts and data scientists in mind, not marketers, sales or service professionals who are increasingly using AI agents to augment their work.

Because most of these data platforms weren’t built for business users, they’re also typically disconnected from business workflows: the CRMs and enterprise apps where those users work every day. This disconnection means AI agents lack the business context to understand when and how to shield certain data, such as customer information or sensitive research.

Without that context and adequate governance policies, businesses risk data non-compliance, data breaches, and misuse of AI, undermining trust and slowing innovation.

Strict data governance and AI policies will soon be table stakes. Gartner predicts that by 2027, governments worldwide will enact AI governance laws, and trust, risk and security management capabilities will be the primary differentiators of AI offerings.

How to establish governance controls

What makes AI agents so powerful is not just that they can take action autonomously like responding to customer questions 24/7 or booking sales meetings — it’s also that they’re grounded in an enterprise’s data. That allows agents to personalize customer interactions or recommend next steps for human workers based on a customer’s full journey with the brand.

But access to this breadth of enterprise data also means organizations must establish access management policies to ensure that agents and their users only access data they are entitled to see.

Fortunately, governing AI agents is not much different from the data management practices businesses have been building for years. Policy-driven access establishes rules to manage access to both structured and unstructured data for different users and AI agents.

For example, a life sciences company might restrict access to research and development data while allowing broader access to marketing information. For companies with footprints in the European Union and the U.S., access policies could prohibit customer data created in the EU from being accessed by American users to comply with the General Data Protection Regulation (GDPR).
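To make the idea concrete, here is a minimal Python sketch of how such rules might be expressed as a policy check. Everything in it is an illustrative assumption rather than any vendor’s API: the `Requester` and `Record` types, the category names, and the two rules simply mirror the life sciences and EU/U.S. examples above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requester:
    """A human user or AI agent asking for data (hypothetical model)."""
    name: str
    department: str
    region: str  # e.g. "EU" or "US"


@dataclass(frozen=True)
class Record:
    """A piece of enterprise data tagged with governance metadata."""
    category: str       # e.g. "r_and_d", "marketing", "customer"
    origin_region: str  # where the data was created


def is_allowed(requester: Requester, record: Record) -> bool:
    """Return True only if every illustrative policy rule permits access."""
    # Rule 1 (assumed): R&D data is restricted to the research department.
    if record.category == "r_and_d" and requester.department != "research":
        return False
    # Rule 2 (assumed): customer data created in the EU is not served
    # to requesters located in the US.
    if (record.category == "customer"
            and record.origin_region == "EU"
            and requester.region == "US"):
        return False
    # Everything else, such as marketing information, is broadly accessible.
    return True


us_seller = Requester(name="sales-agent", department="sales", region="US")
print(is_allowed(us_seller, Record("marketing", "US")))  # True
print(is_allowed(us_seller, Record("customer", "EU")))   # False
print(is_allowed(us_seller, Record("r_and_d", "US")))    # False
```

The same check applies whether the requester is a person or an agent acting on that person’s behalf, which is the point of policy-driven access: the rule lives with the data, not with each application.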

The technical challenge is implementing consistent data access across an organization at scale.

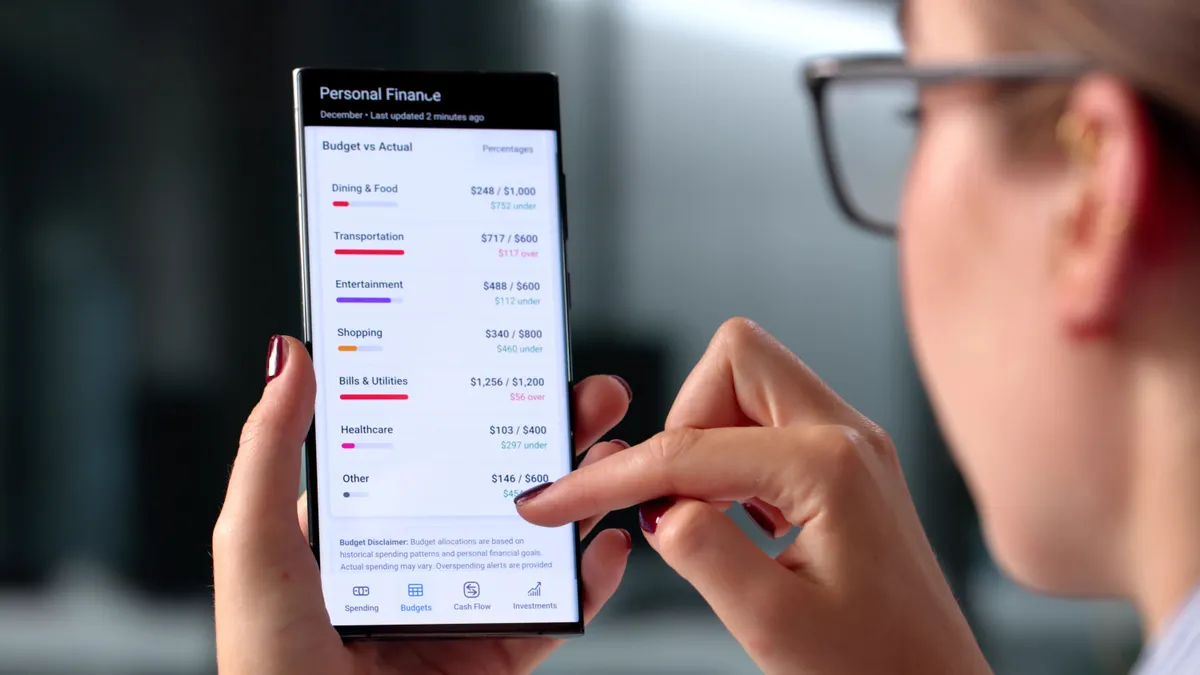

Data blending, or combining data from multiple sources, has never been a bigger job. Nearly half of enterprises manage an average of over 1,000 separate apps, and only 2% of businesses have successfully integrated more than half of their applications, according to MuleSoft’s 2025 Connectivity Benchmark Report.

Siloed data is one of the largest hurdles for IT leaders, and it’s limiting businesses’ ability to scale AI-driven automation and deliver real-time, personalized experiences.

The good news: establishing access policies is easier than ever today. Businesses can create policies with clicks and attach them to users who are, for example, defined in the CRM ecosystem as sales, service or marketing employees. Such a policy tells the AI agent what data it may surface to a given user, preventing users from gaining access to information they aren’t authorized to see.
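Under the hood, a role-attached policy can amount to a filter applied before the agent answers. The Python sketch below assumes a hypothetical `ROLE_POLICIES` mapping and made-up data categories; a real deployment would load these from its governance tooling rather than hard-code them.

```python
# Hypothetical mapping from CRM-defined roles to the data categories
# each role may see; the role and category names are illustrative only.
ROLE_POLICIES = {
    "sales": {"accounts", "opportunities"},
    "service": {"accounts", "support_cases"},
    "marketing": {"campaigns"},
}


def surfaceable(role: str, documents: list[dict]) -> list[dict]:
    """Keep only the documents the user's role is entitled to see.

    Unknown roles get an empty allow-list, so the default is to deny.
    """
    allowed = ROLE_POLICIES.get(role, set())
    return [doc for doc in documents if doc["category"] in allowed]


docs = [
    {"id": 1, "category": "accounts"},
    {"id": 2, "category": "support_cases"},
    {"id": 3, "category": "campaigns"},
]

# The agent would ground its answer only in what passes the filter.
visible_ids = [doc["id"] for doc in surfaceable("sales", docs)]
print(visible_ids)  # [1]
```

Denying by default for unrecognized roles is a deliberate choice in this sketch: an agent that cannot place a user should surface nothing rather than everything.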

How humans and agents can work together

The potential benefits of AI agents are undeniable. As agents take on routine tasks, human employees are freed up to focus on more creative and strategic priorities.

Soon, multi-agent systems, where agents can call other agents or potentially create new ones, will exponentially increase what individuals and organizations can do, ushering in a world where growth is not limited to human output alone.

Of course, this future is only possible if humans trust their agent helpers. Confidence in agent capabilities is essential to spur the agentic AI revolution.

CIOs will have among the most important roles in building confidence in AI systems, shouldering the responsibility of setting up data governance and access policies, and allowing flexibility to tailor the system to their specific use cases and organizational structure.

They will be responsible for ensuring that agents consider where a request originates geographically, who is making it, what level of access that person is authorized for, and other contextual factors to prevent data leakage. Getting this right lets CIOs scale the deployment of agents across their organization with reduced risk.

As human workers increasingly work with autonomous AI agents, providers of this technology must ensure agents know how to censor themselves and prevent security breaches while also being as informative and helpful to their human counterparts as possible.