Editor’s note: The following is a guest post from Heather Gentile, director of product, IBM watsonx.governance Risk and Compliance.

“Shadow” risks are a familiar problem to tech executives. The term refers to assets or applications that fall outside IT’s range of visibility. For years, data and security professionals have labored to find and protect “shadow data” – sensitive business information stored outside the formal data management system.

More recently, IT professionals have wrestled with “shadow AI” – the presence of unsanctioned AI applications within an enterprise’s IT system. Now, a new shadow risk is on the horizon: shadow AI agents.

As generative AI technology advances, businesses are eagerly experimenting with its latest iteration: AI agents that can make decisions, use other software tools and autonomously interact with critical systems. This can be a major boon – but only if businesses have the proper AI governance and security in place.

AI agents’ autonomy is a major selling point. Their ability to pursue goals, solve complex tasks and deftly maneuver across tech environments unlocks major productivity gains.

Time-consuming tasks that once required humans, like troubleshooting IT issues or shepherding HR workflows, can be expedited with the help of agents.

AI agents’ accessibility is another advantage. These tools use advanced natural language processing, so a wide range of workers – not just software developers and engineers – can assign agents new use cases or workstreams.

However, this same autonomy and accessibility can also invite risk when not coupled with proper AI governance and security. Since a wider swath of employees can wield AI agents, the chances of someone using these tools without permission or proper training increase.

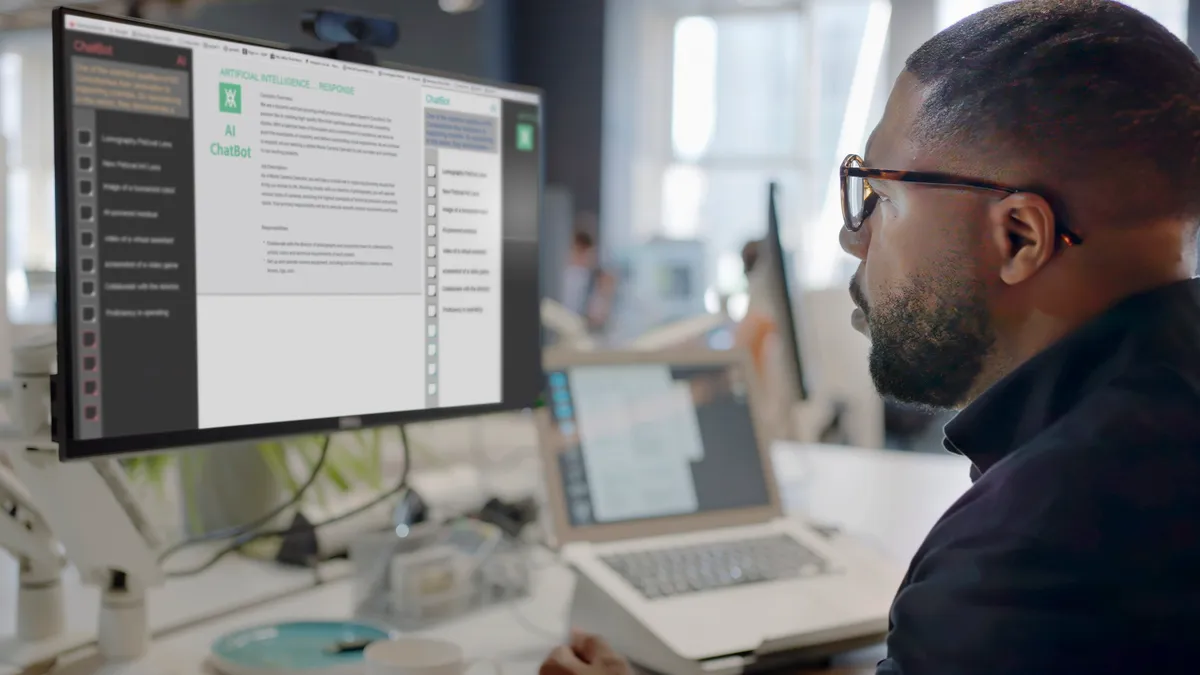

Businesses already experience this problem with AI assistants and chatbots. Workers can feed important company data into unsanctioned, third-party tools – and this data can then be leaked or stolen.

Since AI agents can act unsupervised within key infrastructure, the potential negative impact of shadow configurations also increases. AI agents are vulnerable to the same problems as other AI systems, such as hallucination, bias and drift.

Potential issues are amplified when an AI system has additional autonomy, which can lead to irreversible business harm, reputational damage and compliance violations. Shadow AI agents could also complicate existing multi-agent dependencies and may be more vulnerable to infinite feedback loops that waste enterprise resources.

Gaining control

When shadow data and traditional shadow AI issues emerged, enterprises didn’t halt innovation – they adapted. That should be the strategy in this new era, as well.

The first step for driving out shadows is introducing light. IT professionals need comprehensive visibility into the AI agents in their environment. AI governance and security tools can automatically seek out and catalog AI applications – no more agents lurking in the shadows.

After discovery, each agent must be brought into inventory, where it is aligned with a use case and incorporated into the governance process. Risk assessment, compliance assessment and proper controls and guardrails – all key components of AI governance – are applied to mitigate risk.
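The discovery-then-inventory flow described above can be sketched in a few lines of Python. This is a minimal illustration, not a description of any particular governance product: the `AgentRecord` fields, the function names and the agent names in the usage note are all hypothetical, and the set of discovered agents is assumed to come from whatever discovery tooling an enterprise already runs.

```python
from dataclasses import dataclass, field


@dataclass
class AgentRecord:
    """Hypothetical inventory entry for one AI agent."""
    name: str
    owner: str
    use_case: str
    risk_assessed: bool = False
    compliance_assessed: bool = False
    controls: list[str] = field(default_factory=list)


def find_shadow_agents(discovered: set[str],
                       inventory: dict[str, AgentRecord]) -> set[str]:
    """Return agents seen in the environment that have no inventory record."""
    return discovered - set(inventory)


def onboard(name: str, owner: str, use_case: str,
            inventory: dict[str, AgentRecord]) -> AgentRecord:
    """Bring a discovered agent into inventory, tied to a use case."""
    record = AgentRecord(name=name, owner=owner, use_case=use_case)
    inventory[name] = record
    return record


def governance_gaps(record: AgentRecord) -> list[str]:
    """List the governance steps that have not yet been applied."""
    gaps = []
    if not record.risk_assessed:
        gaps.append("risk assessment")
    if not record.compliance_assessed:
        gaps.append("compliance assessment")
    if not record.controls:
        gaps.append("controls and guardrails")
    return gaps
```

For example, if discovery reports `{"hr-onboarding-bot", "it-helpdesk-agent"}` and only the first is in inventory, `find_shadow_agents` returns the second. Once that agent is onboarded, `governance_gaps` lists all three outstanding steps until each has been completed.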

Enterprises should also make their agents’ actions traceable and explainable. They should set predetermined thresholds for toxicity and bias. And they should carefully monitor agent outputs for context relevance, query faithfulness and tool selection quality.
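As a rough illustration of what predetermined thresholds might look like in practice, the sketch below checks one set of evaluation scores against fixed limits. Everything here is an assumption made for the example: the metric names mirror the ones mentioned above, the scores are assumed to be numbers between 0 and 1 produced by an evaluator that is not shown, and the limit values are placeholders rather than recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    limit: float
    higher_is_better: bool


# Placeholder limits; real values would be set by the governance team.
THRESHOLDS = {
    "toxicity": Threshold(0.10, higher_is_better=False),
    "bias": Threshold(0.10, higher_is_better=False),
    "context_relevance": Threshold(0.70, higher_is_better=True),
    "faithfulness": Threshold(0.70, higher_is_better=True),
    "tool_selection_quality": Threshold(0.80, higher_is_better=True),
}


def violations(scores: dict[str, float]) -> list[str]:
    """Return the metrics that breach their threshold or are missing."""
    breached = []
    for metric, threshold in THRESHOLDS.items():
        if metric not in scores:
            # A metric that was never measured is treated as a breach.
            breached.append(metric)
            continue
        score = scores[metric]
        if threshold.higher_is_better:
            ok = score >= threshold.limit
        else:
            ok = score <= threshold.limit
        if not ok:
            breached.append(metric)
    return breached
```

Treating a missing metric as a violation is a deliberate design choice in this sketch: an agent output that was never scored is flagged rather than silently passed.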

The crux of this strategy is ensuring AI security and governance are deeply integrated disciplines. This collaboration needs to happen at the software level, but also at the people level: AI developers and security professionals should talk early and often.