CIOs are heading into the second half of 2025 with a better sense of how AI oversight is shaping up in the U.S., given the Trump administration’s AI Action Plan and the accompanying trio of executive orders.

The roadmap, released late last month, greenlights AI adoption and development acceleration across the country, including measures to expedite AI infrastructure construction and potential access to funds to retrain workers.

Experts say the deregulatory direction is generally giving executives a confidence boost to fast-track strategies, but there are concerns about downstream effects and execution.

“Enterprises are monitoring the messaging coming from the White House, and in some cases, welcoming what would have been perceived as removing barriers,” Jennifer Everett, partner at law firm Alston & Bird, told CIO Dive. “It’s striking the tone from the executive branch, which will flow into the regulatory agencies, but it remains to be determined what that means for enforcement.”

Most organizations are navigating the evolving regulatory landscape while still trying to hit their stride with AI in terms of pursuing the right use cases, securing applications and governing strategies adequately.

Here are five takeaways that CIOs should keep in mind as regulation evolves:

1. Address diverging regulatory frameworks

AI compliance and regulation readiness are part of the initial round of questions enterprise leaders consider in building their broader technology strategy, according to Peter Mottram, managing director in Protiviti’s technology consulting practice.

“If you’re going to operate in certain countries, you have to be able to abide by their standards,” Mottram said. “There’s a variety of different ways to address diverging regulatory standards. Some are technical, but most are processes and deciding the minimum standard.”

Despite its emphasis on accelerating adoption and development, the U.S. still plans to impose AI-related obligations.

Everett pointed to the policy recommendation in the action plan around establishing cybersecurity standards, response frameworks and best practices. Another measure calls for creating an AI Information Sharing and Analysis Center to promote information gathering across critical infrastructure sectors.

“While there is a push for an expansion of AI, conversely, there is also an acknowledgement of appropriate cybersecurity controls in there,” Everett said. “Enterprises need an eye on the downflow of these obligations.”

2. Same show, new cast

Experts have drawn parallels between data privacy legislation and current AI oversight trends.

The focus on data hygiene and process is one such through line, according to Jim Liddle, chief innovation officer of data intelligence and AI at hybrid cloud storage provider Nasuni.

“All of those legislative frameworks focused a lot of companies at the time around data governance and data cleansing,” Liddle said. “There’s a lot of things you need to do [for AI] … and one of them is you need good data governance.”

Organizations that have adjusted their strategies to meet the European Union’s seven-year-old General Data Protection Regulation and similar data privacy laws will potentially be in a better position to navigate the current wave of AI regulation.

“That’s why you have global compliance teams,” said Martha Heller, CEO of technology executive search firm Heller. “When it comes to AI, it’s not really different — it’s just data on steroids.”

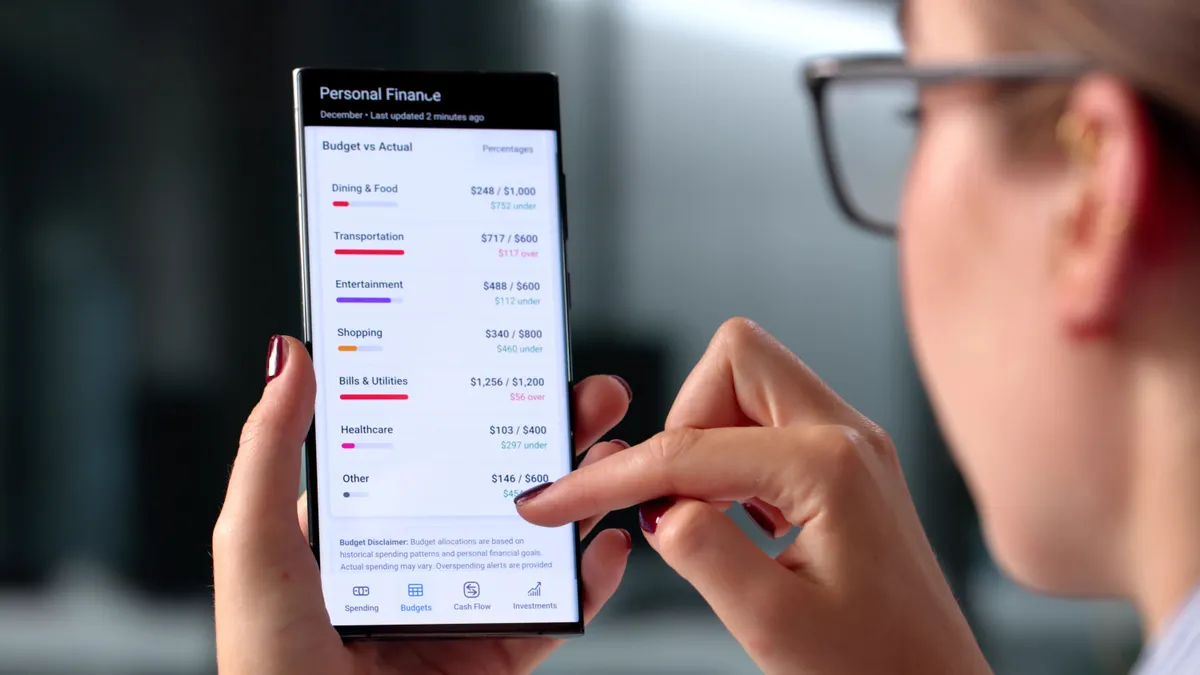

3. Growing focus on evaluation and monitoring technology

The AI Action Plan and one of the three executive orders center on limiting certain biases within models. Analysts say the measures raise questions and could cause downstream impacts.

“Anybody who works with AI knows that [large language models] have bias,” Liddle said. “LLMs have bias because people have bias and those LLMs ultimately are trained on data and data sets that we don’t really know about.”

Most enterprises do not have robust processes to determine bias, according to Liddle, who cited low technical maturity.

“To prove that [an AI system] is not biased and truthful is going to be a really incredibly difficult thing to do,” Liddle said.

4. Cross-functional collaboration with the C-suite

No AI strategy starts and ends with IT — or at least it can’t anymore. When laying out a plan, the C-suite needs to come together to chart the best path.

“What they need is cross functionality,” Heller said. “We can’t have finance do things this way and marketing does their own thing. We need to have these big functions that used to operate a little autonomously to become one.”

HR, legal, compliance and other departments are enablers of sustainable strategies and can help CIOs to future-proof AI plans.

5. Workforce training and AI skills

CIOs have been thinking about how AI has changed, and will continue to change, workforce dynamics. AI skills come at a premium, and because the technology has taken off so recently, the talent pool remains small.

While the AI Action Plan fell short of addressing agentic concerns, it broadly acknowledged the skills hurdle, calling for a “rapid retraining.”

“The notion of unfettered deregulation and innovation will be curtailed by other global or international policies, as it always has,” Heller said. “Still, it means potentially you get a grant to support your workforce training, so there’s still good in there.”

Policy recommendations included tax-free reimbursement for employers’ AI training and skills development, as well as federal support for skills initiatives. The exact route the administration will take, however, remains to be seen.