Last year’s tidal wave of enthusiasm for generative AI adoption could run into a major barrier this year: a scarcity of graphics processing units, the chips that power the nascent technology.

At the center of the vortex of model rollouts, pilot programs, congressional hearings and boardroom drama that followed ChatGPT’s arrival are ongoing rumblings of a potential chip shortage.

During a Senate hearing in May, OpenAI CEO Sam Altman bluntly stated there weren’t enough GPUs. “In fact, we're so short on GPUs,” Altman said, “the less people [that] use our products, the better.”

Cloud providers also struck a cautionary note.

Microsoft listed the GPU supply chain as a risk factor in its July annual report, and AWS raised the issue when it rolled out machine learning-optimized GPU clusters in October. “With more organizations recognizing the transformative power of generative AI, demand for GPUs has outpaced supply,” AWS said in the announcement.

For CIOs, laying the groundwork for AI adoption is more pressing than provisioning GPUs. The preparation process extends from corralling the data, talent and tools needed to fine-tune models, to sorting through the proliferation of cloud-based AI services and SaaS add-ons.

“The constraint of GPUs is real,” Tom Reuner, executive research leader, HFS Research, told CIO Dive. “Yet, the greater issue that enterprise and tech leaders have to crack is the business case of adopting AI.”

Outside of the tech sector, CIOs are largely insulated from short-term fluctuations in chip availability by their cloud providers. Most companies tap into one or more hyperscalers for processing capacity, according to Shane Rau, research VP, computing semiconductors at IDC.

While companies looking to purchase GPUs for on-prem capabilities may find themselves on a waitlist, shortage concerns are most acute for the vendors training models, including cloud service providers, Rau said in an email.

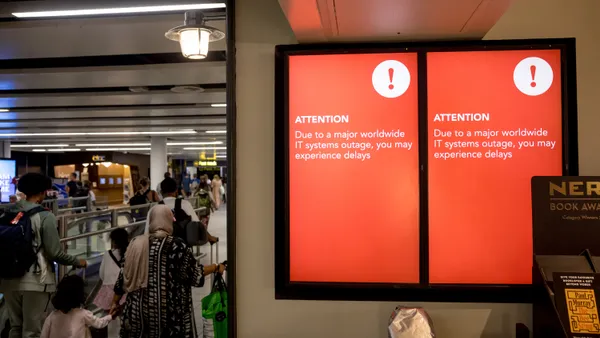

But supply chain bottlenecks can have a downstream impact on the enterprise.

“If you can't get the right servers or PCs from Dell or the right cloud services on time, then it becomes your problem too,” Glenn O’Donnell, VP and research director at Forrester, told CIO Dive.

However, CIOs aren’t typically in the habit of buying chips directly from manufacturers like Nvidia, Intel and Samsung. In addition to provisioning compute via cloud, they procure processing power indirectly, through hardware and products sold by Dell, HP, Microsoft and other vendors that have partnerships with chip manufacturers, O’Donnell said.

CIOs planning broad deployments should discuss capacity forecasts with their cloud providers now, Bret Greenstein, partner and generative AI leader at PwC, said in an email.

The cost of GPU compute may also come down as companies learn to balance workloads more efficiently, Greenstein said.

Cloud cushions the blow

The generative AI rush driving GPU demand is analogous to the hybrid workforce shift that fueled enterprise device purchasing. Supply concerns are likely to linger through the next year, as more manufacturing capacity gradually comes online, spurred in part by the CHIPS and Science Act, O’Donnell said.

To brace for the surge, hyperscalers AWS, Microsoft, Google Cloud and Oracle have all solidified partnerships with GPU supplier Nvidia and raced to build out data center capacity with AI-optimized servers.

Nvidia, which saw revenues soar in the last year, said it’s managing the constraints.

The chipmaker increased production throughout 2023 and intends to continue doing so this year, according to the company. “We expect customers to see improving product availability throughout the year,” an Nvidia spokesperson said.

To forestall a shortfall, the three largest cloud providers began engineering AI-optimized processors before ChatGPT’s arrival. In November, AWS unveiled the latest version of its Trainium and Inferentia chips and Microsoft introduced two proprietary AI chips. In August, Google Cloud deployed an upgrade of its tensor processing unit, an alternative to the GPU.

“If there’s a shortage, the public cloud providers are going to see it first,” Sid Nag, VP analyst at Gartner, said.

The problem isn’t chip design; it’s manufacturing capacity.

“Google, Amazon and Apple don’t manufacture chips. Even Nvidia doesn’t manufacture these chips. Relatively few companies actually make them,” O’Donnell said, pointing to TSMC, Samsung and GlobalFoundries as key industry players.

Intel, which announced an AI-optimized chip for PCs in December, is also ramping up production, according to O’Donnell.

So far, enterprise customers and CIOs looking to deploy generative AI tools aren’t feeling the pinch.

“For most of my clients, there's no GPU shortage,” Erik Brown, senior partner, Product Experience and Engineering Lab at West Monroe, told CIO Dive. “My clients are trying to figure out how to use the cloud providers effectively and maybe looking at startups like CoreWeave that have specific GPU-tuned cloud offerings.”

Smaller language models

The massive size of the foundation models that power generative AI and the compute needed for initial training are major culprits in GPU depletion. Smaller, more contained models, such as Microsoft’s Phi suite, released earlier this month, should help reduce GPU consumption, according to several industry analysts.

Gartner foresees LLMs that are smaller, more curated, restricted to certain users and potentially independent of public cloud, Nag said, pointing to BloombergGPT, a purpose-built LLM for finance launched in March.

“These models can run in a distributed cloud or in apps that run on premises,” said Nag. “The amount of horsepower needed to support that sort of model won’t be as high from a compute perspective.”

CIOs will also get smarter about how they source GPUs and implement LLM tools as the technology matures.

Training a model, for example, isn’t necessary for many enterprise applications, and not all AI operations require GPUs. Inferencing, which involves feeding a model data, queries and task-specific prompts, can be done with high-powered CPUs.

“The vast majority of clients that come to us think they need to train a model, but they don’t,” said Brown. “It’s like moving an egg in a semi-trailer.”

Many use cases simply require skilled prompt engineering and clear guidance on what an off-the-shelf model can and can’t do. Fine-tuning, which does involve changing some parameters, can be trickier but has a much narrower scope than training, Brown said.

As CIOs and enterprises get smarter about aligning the solution to the problem set, the industry will see less reliance on GPU resources.

“A lot of people see a shortage as a mechanism that might stifle innovation,” Brown said. “I think it will drive it. I think it'll make CIOs look at how they can be more effective in using the right tool for the right job right now.”

Once CIOs begin to feel the constraints of a GPU shortage, there’s no magic remedy. One option is to wait until supply improves, O’Donnell said. Otherwise, CIOs should be prepared to find substitute products, pay more or temper their ambitions.

“You may want to take on 20 different AI projects,” O’Donnell said. “But maybe you’re only going to be able to do three.”