Eye-popping data has painted a bleak picture of IT leaders’ ability to run successful AI pilots.

MIT found 95% of generative AI pilots fail despite ambitious enterprise expectations of rapid technology deployment. In a McKinsey survey, nearly 66% of respondents said their companies had not yet begun scaling AI enterprisewide. Indeed, many companies have been stuck in an AI pilot purgatory, some hitting a wall with generative AI last year.

To Bret Greenstein, chief AI officer at consultancy West Monroe, companies that can’t successfully get past the pilot stage can generally be sorted into one of four groups:

- those that tried to scale without a transformation plan

- those with IT teams that did not engage other departments

- those with employees resistant to AI

- those that didn’t communicate or show the potential for value creation

Similar profiles have materialized during previous technological shifts such as the early days of cloud. Today, there’s one key difference.

“AI touches every role,” Greenstein said.

Avoiding failure

Responsibility for getting AI pilots unstuck lands on technology teams, as complex data processing requirements block broad adoption and data governance moves to the top of priority lists. Technology chiefs must also solve data security before scaling can begin. But technical issues aren’t the only obstacles to getting through an AI pilot, nor are they necessarily the biggest.

Success relies on users across all departments.

“You need business every step of the way, especially when it comes to testing,” said Greg Beltzer, Salesforce’s chief customer officer for AI and Agentforce. “... It's not your traditional DevOps model.”

At travel platform Engine, executives are mindful of how they talk about the “why” behind the company’s AI tools, said Demetri Salvaggio, VP of customer experience and operations. The company has a long-standing AI partnership with Salesforce and was one of its first Agentforce platform customers. A culture of experimentation allowed the company to buck the trend of pilot stagnation.

Transparency and openness helped keep Engine out of Greenstein’s first three groups, as leaders were able to engage employees and quell early fears about job replacement. Joshua Stern, director of GTM systems at Engine, noted that creating a “psychological safety net” around AI encourages employees to play around with agents and share their discoveries with each other.

His team built Engine’s customer support agent, Eva, in 12 days. For a team with that much interest and technical fluency, Stern said, it’s easy to assume that everyone else will be just as welcoming and excited about the technology. But that’s not the case.

“They’re a little bit more afraid of it,” Stern said of employees outside the technology department. “They're a little bit more sensitive to using it. You have to create that safety net where they see their peers, their colleagues doing cool stuff with it, and they’ll want to do the same.”

Greenstein’s fourth segment, companies failing to communicate the potential for value, seems easily avoided. But in an enterprise setting, creating value is easier said than done. In fact, MIT's 95% failure statistic stems from a finding that only 5% of AI pilot programs in the study achieved rapid revenue acceleration.

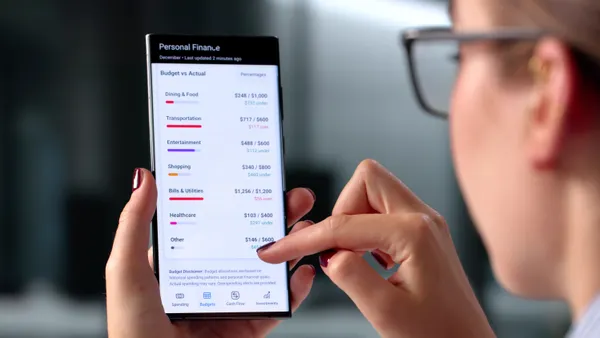

Part of that gap is due to a lack of analysis tools, Beltzer said. If the success metric is time saved per day per employee, for example, that’s difficult to prove. Salesforce eventually developed an observability tool for Agentforce, which Salvaggio said helped Engine optimize Eva. But failure to create value can also stem from putting AI on top of a faulty foundation, no matter how much return the agent promises.

“Whatever process you're trying to automate or make more efficient needs to be a pretty good process. I haven't seen AI actually fix a lot of bad processes,” Beltzer said.

Don’t boil the ocean

Amid enterprise AI deployment efforts, Greenstein, Stern and Salvaggio cautioned against trying to do everything at once. A failed pilot could reflect a flaw in the non-AI process underneath, as Beltzer said. Starting small can help identify those cases.

“If you try to do everything all together all at once, you won't be able to measure effectiveness, and you will never get anything off the ground,” added Stern.

A company Greenstein worked with wanted to do dozens of things with AI, he said. His advice was to pick five to start, because all the processes used the same data sources and some of the same skills. Working out the kinks with a smaller sample can help get to scale.

“When you do that, you'll find that the dependencies have been resolved for many of the others. So, those will be easier and you do them as second wave, third wave things,” Greenstein said.

Although Engine did make it out of the pilot phase, it isn’t done scaling. Stern said the company is finding new use cases for agentic AI every day, and that’s part of the plan.

“Go after a small use case,” Salvaggio agreed. “Take those learnings; get a win, which also helps the team, which also helps everyone that's rallying around it.”