Dive Brief

- By 2023, 75% of large companies will retain AI forensics, privacy and customer trust specialists in a bid to stave off brand and reputation risk, according to a Gartner report.

- Open source and commercial products that assess AI bias are already available, but larger enterprises are projected to bring aboard specialists who validate products in-house during the development and deployment of AI-driven technologies.

- "While the number of organizations hiring ML forensic and ethics investigators remains small today, that number will accelerate in the next five years," said Jim Hare, research vice president at Gartner, in the report.

Dive Insight

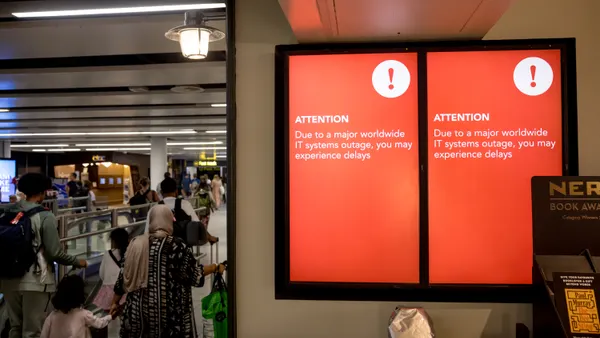

While data breaches are keeping executives up at night, organizations are also concerned about the reputation risk of unethical behavior in their AI platforms, especially racial and gender bias.

Recently, Amazon faced backlash from civil liberties groups over its decision to continue selling facial recognition technology to law enforcement agencies and governments.

The groups, including the American Civil Liberties Union, expressed concern over implicit bias in the algorithms and the targeting of communities of color. A study from Georgia Tech exposed similar bias: researchers found that technology used in autonomous vehicles had trouble detecting pedestrians with darker skin.

Six in every 10 CIOs started their year preparing for new risks brought on by artificial intelligence, machine learning, and data privacy and ethics, according to an IDC report. Against this backdrop, companies will continue to see the benefit of AI behavior forensic experts, Gartner projects.

"New tools and skills are needed to help organizations identify these and other potential sources of bias, build more trust in using AI models, and reduce corporate brand and reputation risk," said Hare in a press release. "More and more data and analytics leaders and chief data officers (CDOs) are hiring ML forensic and ethics investigators."

The initial risk for companies lies in having to rebuild technologies from the ground up, said Rumman Chowdhury, global lead for responsible AI at Accenture Applied Intelligence, speaking at an event in March. But the peril goes beyond sunk R&D costs.

"If something goes wrong you will have to undergo significant overhauls of your system," said Chowdhury. "At worst you will actually have irreparable harm to your company, your reputation and legal liability."