UPDATE: Aug. 11, 2023: Zoom updated its terms and conditions Friday following feedback related to the company's use of customer data to train AI products. The terms now reflect that Zoom will not use any customer data — including audio, video, chat, screen-sharing, attachments or other communications, such as poll results, whiteboard and reactions — to train Zoom’s or third-party AI models.

The company also revamped the sections related to training machine learning and AI models, section 10.2 and section 10.4. The terms now say the company can access customer content for legal, security and safety purposes as well as to "perform our obligations and provide the services."

Zoom is in hot water after under-the-radar changes to its terms and conditions in March allowed the company broad control over user data for its AI projects.

Concerns stem from the gap between what Zoom says it will do with customer and service-generated data, which includes the telemetry, product usage and diagnostic data sets of end users, and what its policy language actually allows.

Zoom said it reserves the right to disclose, modify, reproduce and create derivative works from service-generated data for a number of reasons, including to support machine learning and artificial intelligence, according to section 10.2 of the company’s terms and conditions. The terms include "for the purposes of training and tuning of algorithms and models."

Zoom says it can do the same thing with customer content, which includes uploaded content, files, documents, transcripts, analytics and visual displays, in section 10.4.

Critics characterized the policy as an invasion of privacy and questioned Zoom’s opt-out options.

The company responded to the backlash by adding a note Monday under both sections that said, “Notwithstanding the above, Zoom will not use audio, video or chat Customer Content to train our artificial intelligence models without your consent.”

Customer consent and Zoom’s intentions were recurring themes in a blog post that accompanied the updated terms Monday. The company said its intent with section 10.2 was to establish its ownership over service-generated data. Section 10.4 was intended to ensure the company had a license to "deliver value-added services" without questions of usage rights.

“Zoom customers decide whether to enable generative AI features, and separately whether to share customer content with Zoom for product improvement purposes,” a Zoom spokesperson told CIO Dive in an email. “We’ve updated our terms of service to further confirm that we will not use audio, video, or chat customer content to train our artificial intelligence models without your consent.”

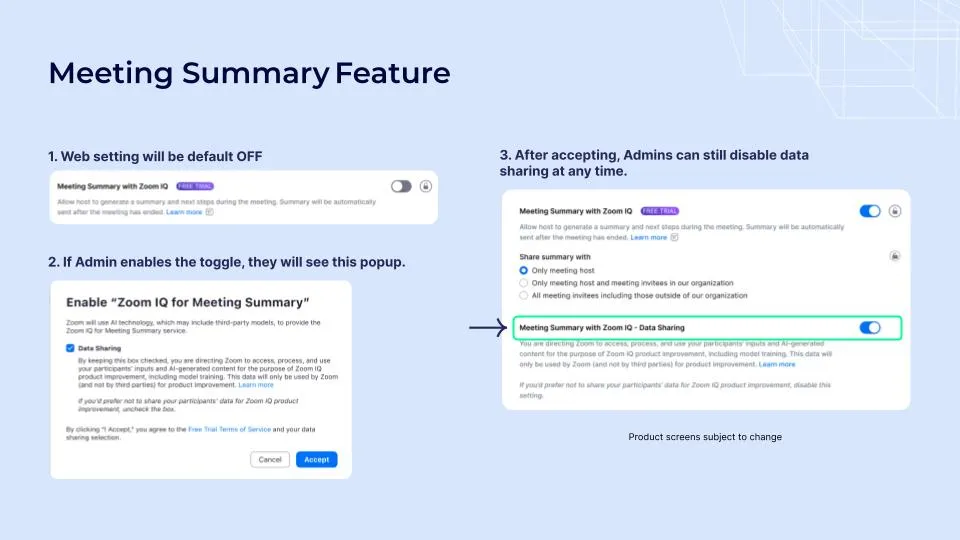

The blog post addressed concerns related to the opt-out process. A screenshot of the Zoom IQ for Meeting Summary user interface highlighted where users can accept or deny the company access to content for model training. Zoom also included an image of the interface where users can disable data sharing at any time, even if they initially accepted.

Public disapproval of Zoom’s updated terms reflects the persistent tension around user privacy expectations, but the terms also signal the increasing need for vendors to organically grow AI technologies, according to Chris Hart, partner and co-chair of privacy and data security at law firm Foley Hoag.

AI is a critical part of Zoom's overall strategy. The company has beefed up its capabilities while partnering with generative AI companies such as Anthropic and OpenAI.

With an enterprise business segment growing 13% year-over-year and representing 57% of total revenue in Q1 2024, Zoom is among a host of vendors looking for a middle ground that lets them use customer data to boost monetized AI tools and future projects without drawing criticism.

“I’m sure we will see businesses try to find ways to strike the right balance between obtaining and using data from users while also reassuring individuals about privacy protections,” Hart said in an email. “This will of course be shaped by a rapidly evolving regulatory environment.”

OpenAI responded to data privacy concerns raised by the Italian Supervisory Authority by adding more guardrails and user controls in April, but concerns from agencies and industry watchers have persisted. The Federal Trade Commission is currently probing the company about its data practices.

Even with the risks, most customers will purchase generative AI applications and tools rather than develop the applications themselves, said Bill Wong, principal research director at Info-Tech Research Group.

“Some vendors are keeping the data and openly saying it will be used to train the model for others to use,” Wong said in an email. “Customers should challenge this.”