Dive Brief:

- Soaring demand for AI infrastructure helped Nvidia realize record revenues of $57 billion for the third quarter, up 22% from Q2 and 62% year over year, according to the company’s Q3 2026 earnings call for the period ending Oct. 26.

- Nvidia said its cloud graphics processing units are sold out as the company’s revenue in the data center segment reached $51.2 billion, a 66% increase year over year.

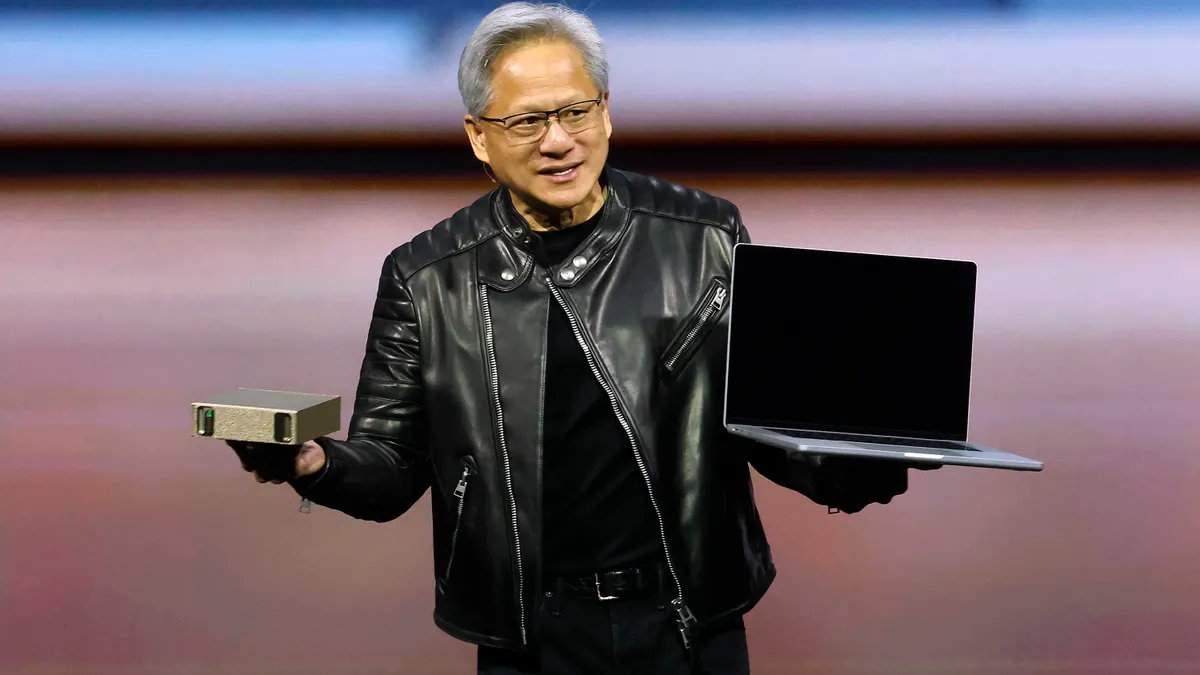

- “Compute demand keeps accelerating and compounding across training and inference – each growing exponentially,” Jensen Huang, CEO and founder of Nvidia, said in a Q3 results announcement. “The AI ecosystem is scaling fast – with more new foundation model makers, more AI startups, across more industries, and in more countries.”

Dive Insight:

Hyperscalers are spending billions on AI infrastructure to ensure they get in on the ground floor of the AI era, even as enterprises have yet to realize the full benefits of the technology.

Accelerating compute capabilities and scaling generative AI across hyperscaler workloads is a significant contributor to Nvidia’s “long-term opportunity,” said Nvidia EVP and CFO Colette Kress.

Google Cloud, Microsoft and AWS have committed billions in capital investments in AI infrastructure, boosting Nvidia’s expansion. Nvidia struck partnerships with industry leaders including Google, Microsoft and Oracle to build out U.S. AI infrastructure, which will be powered by thousands of Nvidia GPUs, according to the earnings announcement.

Aggressive compute spend by foundation model builders — including Anthropic, Mistral and OpenAI — is another growth pillar for the company, Kress shared during the call.

“We have evolved over the past 25 years from a gaming GPU company to now an AI data center infrastructure company,” Kress said.

Hyperscalers are spending big on AI infrastructure because “they know they have to,” said Daniel Newman, CEO at The Futurum Group.

“2026 is sold out,” Newman said in an email to CIO Dive. “And 2027 demand will follow suit.”

It’s undeniable that compute demand is strong, said Gartner VP Analyst Gaurav Gupta. However, for enterprises to start seeing a return on their AI investments, they will have to start using models for inferencing rather than training.

Fewer than half of IT leaders said their AI projects were profitable in 2024, while 33% broke even and 14% recorded losses, according to IBM research. Still, most businesses anticipate AI-driven cost savings to occur within the next three years.

“For ROI to come, inference has to pick up,” Gupta said. “It’ll take time for the industry on the street to see revenue pick up.”

Huang acknowledged market talk of an AI bubble during the earnings call, but said “we see something very different” as the transition to accelerated computing serves as a critical foundation for AI initiatives.

The industry has yet to see the beginning of the “enterprise AI wave,” according to Newman.

“We expect the forecasts for AI infrastructure [will continue] to tick up,” Newman said.

PC maker Lenovo in its Q2 earnings call flagged a shift already happening in the enterprise AI market, as companies move from AI training in public cloud to AI inferencing on-premises. Lenovo saw 15% growth in revenue year over year to $20.5 billion. Its AI-related revenue accounted for 30% of the company’s total Q2 revenue, up 13 percentage points year over year.

Increased AI revenues were driven by double-digit growth in AI servers and triple-digit growth in AI PCs, smartphones and services, according to Lenovo’s Q2 earnings announcement Thursday.

“We are seeing today’s AI era unfold along a clear path,” Lenovo Chairman and CEO Yuanqing Yang said during the call. “Now as large language models become increasingly commoditized, user priorities are shifting towards personalization and the private domain.”