Dive Brief:

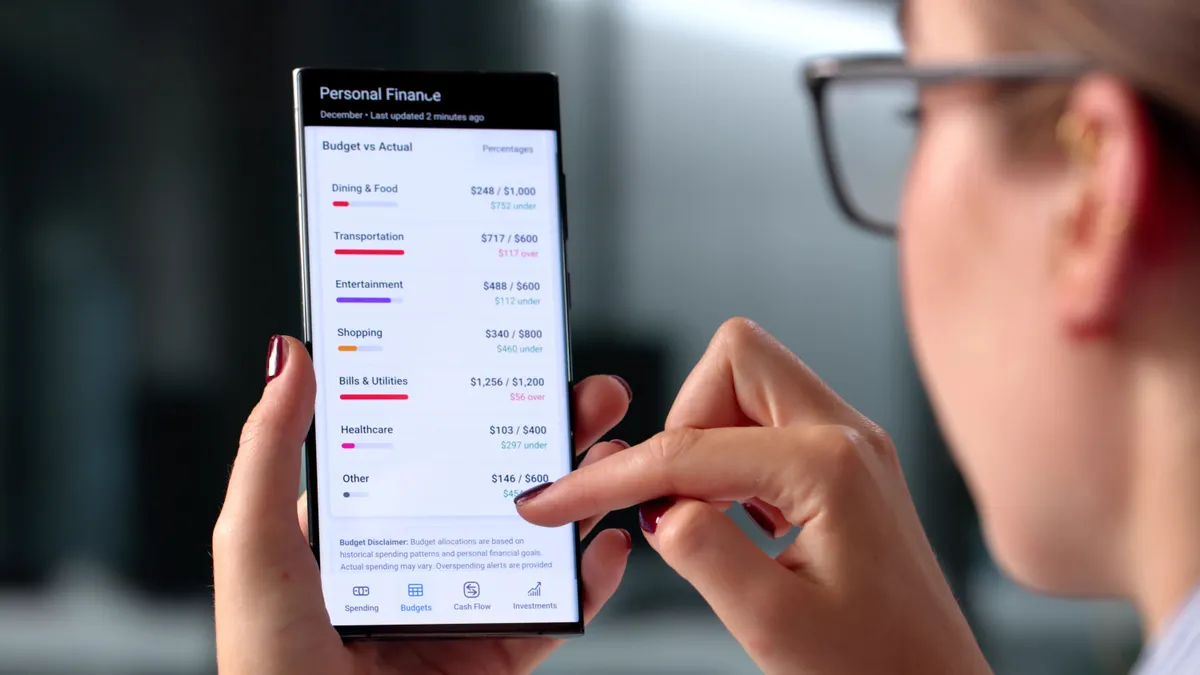

- Enterprises created AI guidelines and policies, but not everyone is following the rules, according to research commissioned by Nitro. The software company analyzed more than 1,000 responses from C-suite leaders and employees.

- More than two-thirds of C-suite executives admitted using unapproved AI tools at work in the past three months. Of those, more than one-third used unapproved tools at least five times during the last quarter. More than half rated security and compliance as "challenging" or "extremely challenging" when implementing AI.

- Employees also ignore established standards around AI tools. One in three workers confessed to using AI to process confidential company information.

Dive Insight:

AI sprawl is taking over the workplace as the rush to adopt the technology leads to a proliferation of tools and platforms without a unifying strategy.

Shadow AI is one of the driving forces behind the trend, bringing with it a set of security implications. Around 20% of organizations that suffered a breach traced it back to shadow AI, with the global average cost surpassing $4 million, according to an IBM survey.

Executives and employees alike are guilty of turning to unauthorized AI tools. Some see it as a way to circumvent arduous approval processes that stand in the way of speed.

“If your competitors are using AI to accelerate content production right now, waiting for the approved stack means losing ground every day,” Cormac Whelan, CEO at Nitro, told CIO Dive in an email. “They’ve made a calculated decision that asking for forgiveness beats explaining why they sat on the sidelines waiting for compliance.”

C-suite leaders have a similar inclination, as surveys like Nitro's show. CalypsoAI, for example, found that more than two-thirds admitted they’d use AI to make their job easier even if it conflicted with internal policies.

Shadow AI can also be a symptom of poor tooling. After all, three-quarters of employees say they abandon AI tools mid-task, usually due to accuracy concerns, according to a Udacity survey.

Tools that aren’t useful don’t get used, wasting valuable resources amid runaway AI costs.

“It's a wake-up call,” Whelan said in an email. “Adoption is earned, not mandated.”